PCIe MSI Interrupt latency on x86

I have been using MSI / MSI-X interrupts for quite some time in my FPGA-based designs with plenty of various DMAs. They have served well over the years, and I have never encountered any errors except for rare cases where I accidentally screwed something up myself. Quite recently, the interesting topic of their latency came up. Honestly, I wasn't sure what to expect, and I don't usually care much whether an interrupt arrives in 1 us or 1 ms. After all, the purpose of an interrupt is to disturb the processor once some work is completed.

Some latency-sensitive applications, though, might require the interrupt to arrive within some “reasonable time”. Here is the problem, however. Because of how MSIs are implemented, they are essentially identical to any other standard data packet (a memory write TLP) traversing the PCIe topology, with a single exception that makes them distinguishable: they target the interrupt controller instead of RAM. There is no guarantee that a TLP will arrive within some well-defined time. For instance, a high bit-error rate on the PCIe link might cause the packet to be retransmitted several times before it reaches its destination. Some switches might also prioritize traffic from other devices depending on the TC / VC settings of the TLP.
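To make "targets the interrupt controller instead of RAM" concrete, here is a minimal sketch of how an MSI address / data pair is composed on x86 in the classic (non-remapped) format. The function names are made up for illustration, and the sketch assumes interrupt remapping (VT-d) is disabled, since the remappable format uses a different layout. In practice the OS programs these values into the device's MSI capability; the device never computes them itself.

```python
# Sketch of the x86 "compatibility format" MSI message (no interrupt remapping).
# Function names are hypothetical; the bit positions follow the x86 MSI layout.

MSI_ADDRESS_BASE = 0xFEE00000  # address bits 31:20 are fixed to 0xFEE


def msi_address(dest_apic_id: int,
                redirection_hint: bool = False,
                logical_mode: bool = False) -> int:
    """Build the 32-bit address the device writes to when raising an MSI."""
    if not 0 <= dest_apic_id <= 0xFF:
        raise ValueError("destination ID must fit in 8 bits")
    addr = MSI_ADDRESS_BASE | (dest_apic_id << 12)  # bits 19:12: destination ID
    if redirection_hint:
        addr |= 1 << 3                              # bit 3: redirection hint
    if logical_mode:
        addr |= 1 << 2                              # bit 2: destination mode
    return addr


def msi_data(vector: int, delivery_mode: int = 0) -> int:
    """Build the data word for an edge-triggered MSI."""
    if not 0 <= vector <= 0xFF:
        raise ValueError("vector must fit in 8 bits")
    if not 0 <= delivery_mode <= 0x7:
        raise ValueError("delivery mode must fit in 3 bits")
    return vector | (delivery_mode << 8)            # bits 7:0 vector, 10:8 mode


if __name__ == "__main__":
    # Example: vector 0x41 delivered to the CPU with APIC ID 3, fixed delivery.
    print(hex(msi_address(3)), hex(msi_data(0x41)))
```

From the fabric's point of view, the result is just a 32-bit memory write to an address in the 0xFEExxxxx window, which is why it is subject to the same ordering, flow control and replay rules as any other posted write.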

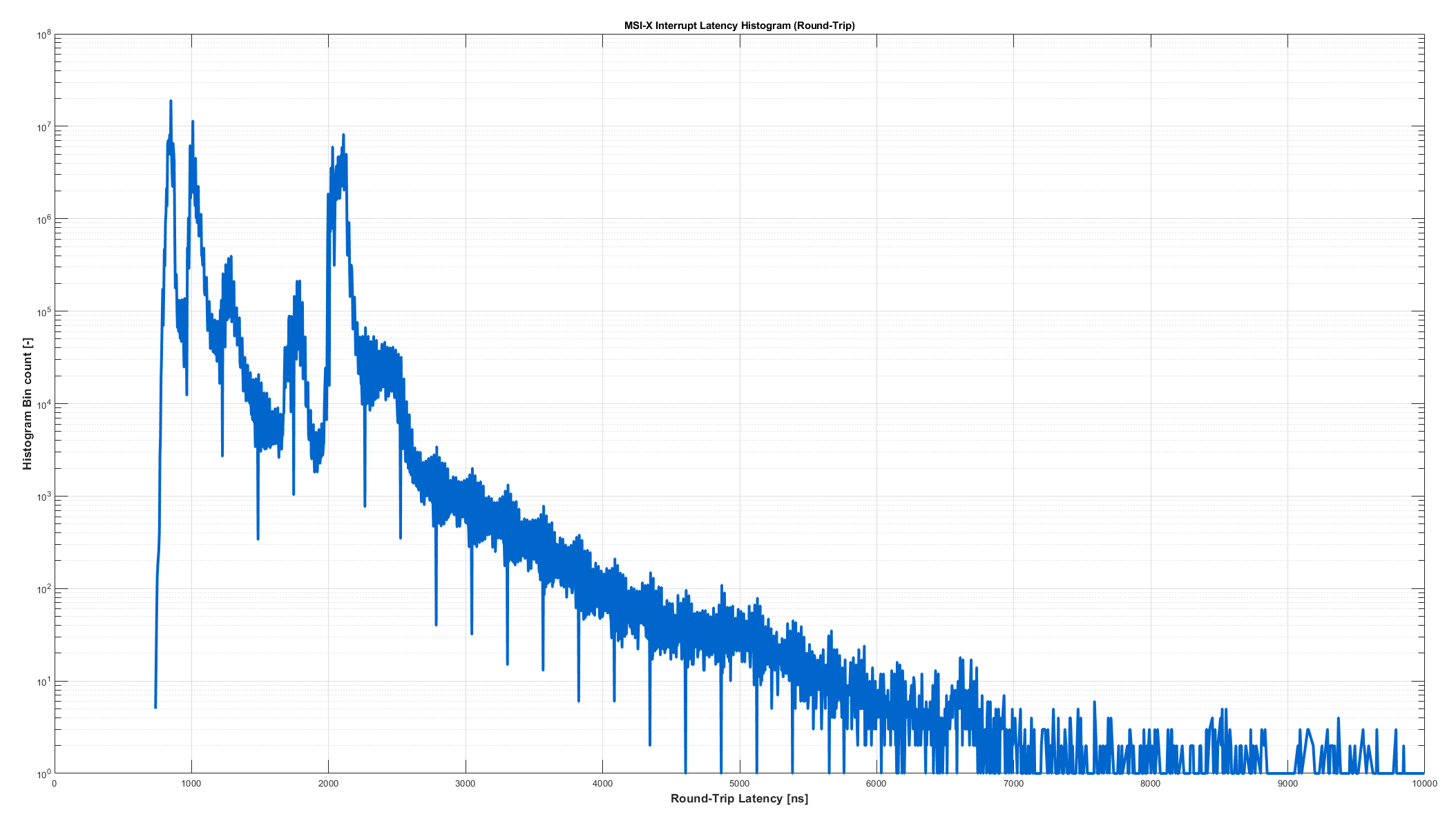

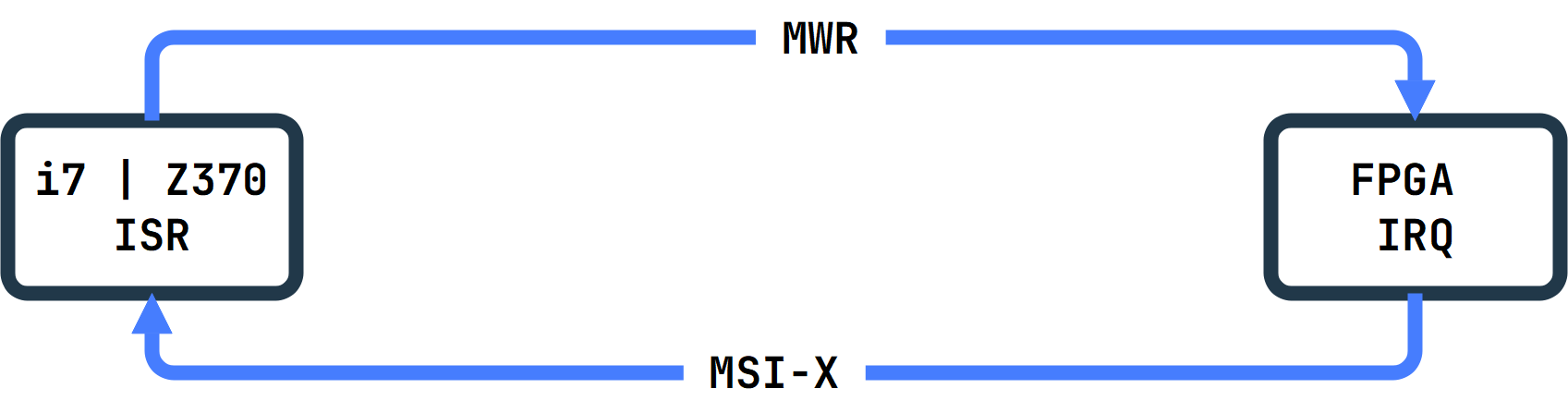

Nevertheless, I decided to test the latency myself on my old platform with an 8th-generation Intel Core i7 processor, a Z370 chipset and the openSUSE 15.6 Linux distribution. The setup was a KCU116 development board with a PCIe Gen 3 x8 interface. The timer was running at 250 MHz with a clock period of 4 ns (i.e. the resolution of the measurement). Measuring the interrupt latency exactly is not an easy task, however, as the PC and the FPGA use different time bases. Synchronizing them would be quite difficult, if not impossible. Therefore, I measured the time from the assertion of the interrupt at the FPGA until the ISR on the host writes data back to the FPGA, i.e. effectively the entire round-trip delay from the FPGA to the RC and back from the RC to the FPGA.
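The round-trip idea can be modelled in a few lines: latch a free-running counter when the interrupt is asserted, latch it again when the host's write arrives, and convert the difference to time. This is only a sketch of the arithmetic, not the actual FPGA logic; the 32-bit counter width and the function names are assumptions for illustration.

```python
# Sketch: convert two snapshots of a free-running FPGA counter into a latency.
# COUNTER_BITS is an assumption; the 250 MHz clock comes from the setup above.

TIMER_HZ = 250_000_000
NS_PER_TICK = 1_000_000_000 // TIMER_HZ   # 4 ns per tick
COUNTER_BITS = 32                          # assumed counter width


def elapsed_ticks(start: int, stop: int, bits: int = COUNTER_BITS) -> int:
    """Ticks between two snapshots, tolerating a single counter wrap-around."""
    return (stop - start) & ((1 << bits) - 1)


def round_trip_ns(start: int, stop: int) -> int:
    """Round-trip latency in nanoseconds (resolution: one tick = 4 ns)."""
    return elapsed_ticks(start, stop) * NS_PER_TICK


if __name__ == "__main__":
    # IRQ asserted at tick 100, host write observed at tick 725.
    print(round_trip_ns(100, 725), "ns")
    # Same interval, but the counter wrapped in between.
    print(round_trip_ns(0xFFFFFF00, 0x00000171), "ns")
```

Because both snapshots come from the same FPGA clock, no synchronization with the host time base is needed. The price is that the result includes the return path as well.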

ISR Routine

The histogram that you see was created directly in a Linux ISR and consists of 256M samples. The system was idle, and there was no other traffic on the PCIe link that could affect the latency. For better readability, the y-axis uses a logarithmic scale. The only uncertainty is related to the register accesses within the FPGA, which take some time; my best guess is ~40 ns, i.e. the entire chart should be shifted left by 40 ns. As you can see, most of the interrupts finish within ~2.5 us (this is still a round trip), but latencies of up to 7 us still occur.
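A histogram like this boils down to fixed-width binning plus an overflow bucket. The sketch below is a user-space illustration of that bookkeeping, not the ISR code itself; the 100 ns bin width and the helper names are assumptions, while the 40 ns offset and the 7 us display limit come from the text above.

```python
# Sketch: bin round-trip samples (in ns) into a fixed-width histogram.
# BIN_NS is a hypothetical choice; OFFSET_NS and MAX_NS come from the article.

BIN_NS = 100        # assumed bin width
MAX_NS = 7_000      # the chart shows latencies up to 7 us
OFFSET_NS = 40      # estimated FPGA register-access overhead


def corrected(sample_ns: int, offset_ns: int = OFFSET_NS) -> int:
    """Shift a sample left by the estimated fixed overhead, clamping at zero."""
    return max(sample_ns - offset_ns, 0)


def build_histogram(samples_ns, bin_ns: int = BIN_NS, max_ns: int = MAX_NS):
    """Return per-bin counts; the last entry collects everything >= max_ns."""
    n_bins = max_ns // bin_ns
    counts = [0] * (n_bins + 1)
    for s in samples_ns:
        idx = min(corrected(s) // bin_ns, n_bins)
        counts[idx] += 1
    return counts


if __name__ == "__main__":
    demo = [2540, 2500, 2460, 7100, 12000]   # made-up samples, in ns
    hist = build_histogram(demo)
    print(hist[24], hist[25], hist[-1])      # two bins near 2.5 us + overflow
```

Keeping the per-sample work to one subtraction, one division and one increment is what makes it plausible to do this inside an interrupt handler. Plotting the counts on a logarithmic y-axis is then a separate, offline step.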

During this measurement, only 2 out of the 256M samples had a latency of 10 us or more (the histogram was manually adjusted to show only up to 7 us). I also double-checked the functionality with the integrated ChipScope, and indeed, the IRQ-ACK had a turnaround time of approximately 500 clock cycles (2 us). Note, however, that the MSI / MSI-X latency might be influenced by various other things, to name a few:

- PCIe traffic (writes from the FPGA to the RC or reads issued by the RC to the FPGA)

- PCIe Generation and link width

- PCIe Link’s Bit-Error Rate (BER / SER)

- System topology and the number of PCIe switches in between

- CPU/OS scheduling, affinity, NUMA topology, etc.
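The figures quoted before the list can be cross-checked with a bit of arithmetic. The sketch below converts the ~500-cycle turnaround to microseconds and estimates how rare the 10 us outliers were. Whether "256M" means 256 × 10^6 or 256 × 2^20 is not stated, so both readings are treated as assumptions.

```python
# Sketch: sanity-check the quoted numbers (4 ns tick, 500 cycles, 2 outliers).

NS_PER_TICK = 4                          # 250 MHz timer
TOTAL_DECIMAL = 256 * 10**6              # "256M" read as 256 million
TOTAL_BINARY = 256 * 2**20               # "256M" read as 256 Mi


def cycles_to_us(cycles: int) -> float:
    """Convert timer cycles to microseconds."""
    return cycles * NS_PER_TICK / 1000


def tail_fraction(outliers: int, total: int) -> float:
    """Fraction of samples that fell into the tail."""
    return outliers / total


if __name__ == "__main__":
    print(cycles_to_us(500), "us")               # turnaround seen in the ILA
    print(tail_fraction(2, TOTAL_DECIMAL))       # roughly 7.8e-9
    print(tail_fraction(2, TOTAL_BINARY))        # roughly 7.5e-9
```

Under either reading, fewer than one interrupt in a hundred million exceeded 10 us on this idle system. That number says nothing about a loaded system, where the factors listed above come into play.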