Real time Julia and Mandelbrot set Visualization

Fractal set introduction

The Julia and Mandelbrot sets are two of the most famous fractals, known for their intricate and endlessly repeating patterns. These sets are created by repeatedly applying a mathematical formula to complex numbers. If the result of this process converges, the number is part of the set; if it diverges, it’s not. Both sets use similar formulas, but produce vastly different and visually stunning results:

- Julia Set Equation : z = z2 + c

- Mandelbrot Set Equation: z = z2 + c

A key difference between the Julia and Mandelbrot sets lies in the value of the constant ‘c.‘ In a Julia set, ‘c‘ remains fixed, while in a Mandelbrot set, it varies depending on the region being explored (typically within the range of -2.0 to 2.0 for both the real and imaginary parts). This makes ‘c‘ a scalar for Julia sets and a complex matrix for Mandelbrot sets.

The number of iterations required to accurately determine convergence or divergence depends on various factors, including the desired level of detail and the floating-point precision. While 100 iterations might suffice for basic visualization, the optimal number may vary. Points near the set’s boundaries often exhibit slower convergence or divergence rates compared to points further away. Therefore, the chosen convergence threshold can significantly impact the appearance of the set’s details.

Compute performance

Now that we know, how fractals are created,we can asses the required perfromance to compute them. Lets start with the set formula itsel. For each complex number multiplication (square root), a total of 4 multiply an 2 add operations are required ((A + Bi) x (C + Di) = (AC – BD) + (CB + AD)i). By taking into account “+c” (+2 for real and imaginary parts), we get 8 floating point operations per single pixel. For a FullHD resolution of 1920×1280 pixels, this yields 1920x1080x8 operations. By further taking into account the iteration count of 100, we get ~1.7 GFLOPS of required compute power. Now consider that rendering needs to be done in realtime for a video application at “lets say” 60 Hz for a standard PC monitor. The compute performance quickly jumps to 100GFLOPs (Giga Floating point operations per second). While modern processors are capable of computing at this speed, bear in mind, that this is the theoretical minimum value as additional operations will be required around.

Thanks to the independent nature of pixel calculations (meaning each pixel can be computed separately without relying on the results of others), we can harness the parallel processing power of modern GPUs to rapidly generate and display the intricate patterns of the Julia and Mandelbrot sets:

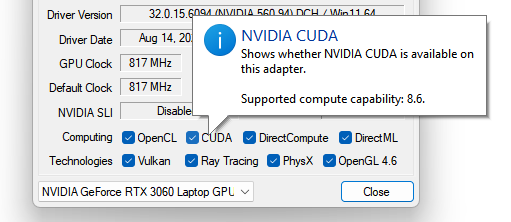

In order to launch the demo, ensure that your PC has a CUDA-capable device (nVidia GeForce / Quadro / RTX series). It might be required to also update your graphics drivers, which is highly recommended (Please go to nVidia.com, select, download and install the appropriate version of the drivers for your device). The following CUDA compute capabilities are supported: [5.0 | 5.2 | 5.3 | 6.0 | 6.1 | 6.2 | 7.0 | 7.2 | 7.5 | 8.0 | 8.6 | 8.7 | 8.9 | 9.0]. You may use the free GPU-Z utility to find out of you device supported. Only 64-bit Windows 10 and Windows 11 are supported.

CUDA Compute Kernel

/****************************************************************

* @brief Main Julia Kernel

* @param Plane: Julia Function Values

* @param C: Custom Julia Set Complex Constant

* @param Iterations: Amount of iterations processed for each point

* @param Threshold: Threshold for computing

* @param NumIter: Amount of Iterations per kernel call

* @param Nx X Dimensioon of the Grid

* @param Ny Y Dimension of the Grid

*****************************************************************/

__global__ void JuliaKernel(cufftComplex* Plane, cufftComplex C, int* Iterations, float Threshold, int NumIter, int Nx, int Ny) {

int tix = threadIdx.x + blockIdx.x * blockDim.x;

int tiy = threadIdx.y + blockIdx.y * blockDim.y;

if (tix < Nx && tiy < Ny) {

int tid = tix + tiy * Nx;

// Load from global memory

cufftComplex LA = Plane[tid];

int IterProcessed = Iterations[tid];

// Chunk Iteration Processing Loop

for (unsigned int i = 0; i < NumIter; i++) {

cufftComplex Res = LA * LA + C;

cufftReal Absolute = abs(Res);

if (Absolute < Threshold) {

LA = Res;

IterProcessed++;

}

}

// Upload to Global Memory

Plane[tid] = LA;

Iterations[tid] = IterProcessed;

}

}The Mandelbrot computing kernel is very similar to the Julia computing kernel due to the similarity between their definitions. The only important thing to note here is that it might take some time for the kernel to finish its work and on some systems, you might encounter the nVidia driver to crash – but this is only due to the fact, that the driver thinks that the kernel is frozen (Even though it is intensively computing the fractal set). The problem is generally known as TDR (Timeout Detection and Recovery).

In order to avoid problems with this mechanism, all the required iterations are processed in chunks (IE instead of computing all iterations per a single kernel call, only some smaller portion of those iterations are processed per a single kernel call). As an example 100 iterations might be divided between 2 kernel calls each of 50 iterations. This generally known as “splitting technique”. As a result, the kernel will finish its work before the driver will reset the device. This problem have been addressed on nVidia Pascal and newer GPU cards by introduction of the Compute Preemption feature.

The older Matlab-Based project that still support CPU computing can be downloaded ➡️ HERE ⬅️ and contains scripts for visualization and also the Microsoft VS 2019 project targeted for CUDA 10.2 toolkit (Devices with compute capability 6.1 – IE. GeForce 10xx). The computation type can be selected during initialization, look for “UseCPU” if you don’t want co compile Matlab mex files (Or you cannot). Note that computing via CPU in Matlab is slow (Even if matrix operations are applied) and that some code used there will become obsolete in upcomming Matlab releases.