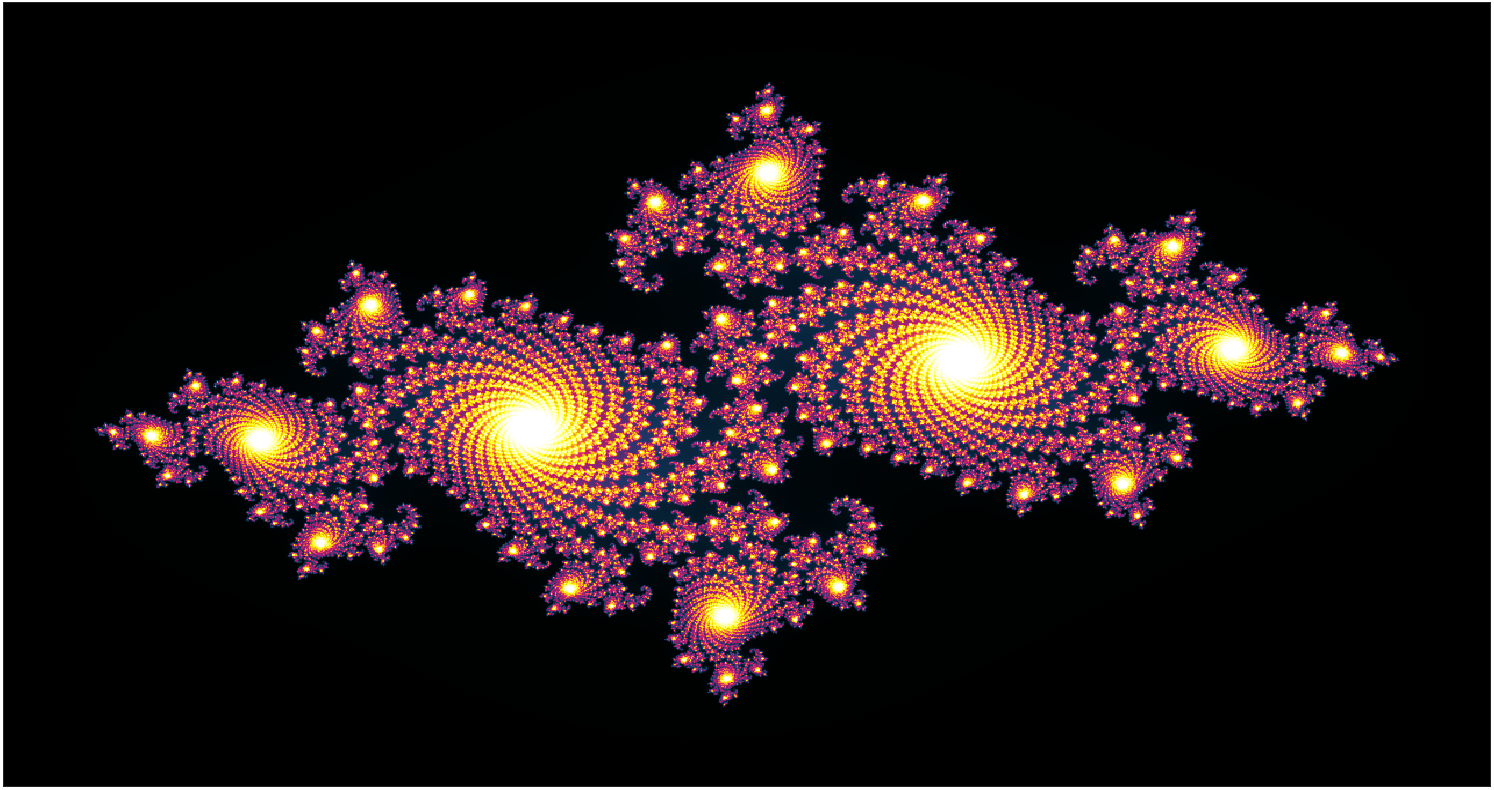

Both the Julia set and the Mandelbrot set are among the most famous fractals sets and it is likely,that you have seen some images of those sets already. They are basically endlessly – repeating patterns in complex plane. To see whether a point from the complex plane lies in a fractal set or not, one has to compute an equation over and over again and see whether it converge or diverge. If for the given point the equation does converge, the point lies in the set. If it diverges, then it doesn’t. Both sets use the same formulas:

- Julia Set Equation: z = z2 +c

- Mandelbrot Set Equation: z = z2 + c

The main difference is however that for a Julia set, the c – constant is a scalar value while for the mandelbrot set, it changes based on the area of interest ( Usually X → [-2,2] and Y → [-2 , 2] ) and therefore is generally a matrix (Complex Matrix). Amount of iterations of the above equations should be “high enough” to properly decode the divergence / convergence criterion. I did not estimated the right amount of iterations, although ~100 seemed just about legal to show the sets properly. The value might be however dependent upon the complex plane discretization and/or floating point precision. The second important parameter is the convergence/divergence threshold. IE, if the function value is 100, is the function considered to be diverging or not? The general rule is that some points close to set’s borders will converge/diverge slower that other points, which are far away from the set. As such, this parameter will mostly affect the set’s borders.

The reason why I have created this post is however that computing these sets is extremely costly operation. Lets say we want to visualize the Julia set in FullHD resolution (1920 x 1080 pixels). We will choose 100 iterations. Note that all computations are complex. As such, (A + Bi) x (C + Di) = (AC – BD) + (CB + AD)i. IE 4 floating point multiply operations and 2 floating point additions, or more precisely 4 additions (by incorporation the complex constant). As a result, the computation of the set will require : 1920 x 1080 x 100 x ( 4 + 4) floating point operations. Whats more, this task is perfectly suited for acceleration by GPU architecture:

/****************************************************************

* @brief Main Julia Kernel

* @param Plane: Julia Function Values

* @param C: Custom Julia Set Complex Constant

* @param Iterations: Amount of iterations processed for each point

* @param Threshold: Threshold for computing

* @param NumIter: Amount of Iterations per kernel call

* @param Nx X Dimensioon of the Grid

* @param Ny Y Dimension of the Grid

*****************************************************************/

__global__ void JuliaKernel(cufftComplex* Plane, cufftComplex C, int* Iterations, float Threshold, int NumIter, int Nx, int Ny) {

int tix = threadIdx.x + blockIdx.x * blockDim.x;

int tiy = threadIdx.y + blockIdx.y * blockDim.y;

if (tix < Nx && tiy < Ny) {

int tid = tix + tiy * Nx;

// Load from global memory

cufftComplex LA = Plane[tid];

int IterProcessed = Iterations[tid];

// Chunk Iteration Processing Loop

for (unsigned int i = 0; i < NumIter; i++) {

cufftComplex Res = LA * LA + C;

cufftReal Absolute = abs(Res);

if (Absolute < Threshold) {

LA = Res;

IterProcessed++;

}

}

// Upload to Global Memory

Plane[tid] = LA;

Iterations[tid] = IterProcessed;

}

}The mandelbrot computing kernel is very similar due to the definition. The only important thing to note here is that it might really take some time for the kernel to finish the work and on some systems, you might encounter the nVidia driver to crash – but this is only due to the fact, that the driver thinks that the kernel is frozen (Even though it is computing the Julia set). The problem is generally known as TDR (Timeout Detection and Recovery). In order to avoid problems with this mechanism, all the required iterations are processed in chunks (IE instead of computing ALL iterations per a single kernel call, only some smaller portion of those iterations are processed per a single kernel call). As a result, the kernel will finish in time. This problem have been addressed on nVidia Pascal and newer GPU cards by introduction of the Compute Preemption feature.

The entire project can be downloaded ➡️ HERE ⬅️ and contains Matlab scripts for visualization and also the Microsoft VS 2019 project targeted for CUDA 10.2 (Devices with compute capability 6.1 – IE. GeForce 10xx). The computation type can be selected during initialization, look for “UseCPU” if you don’t want co compile Matlab mex files (Or you cannot). Note that computing via CPU is slow (Even if matrix operations are applied).